ARToolkit is capable of tracking a predefined marker in real time and calculating its pose at frame rate. By combining ARToolkit with OGRE, we can track a marker in real time and apply its pose (position and orientation) to a graphics object rendered by OGRE. Let's briefly go through the steps involved in combining ARToolkit and OGRE. I started out by downloading one of the OGRE tutorial applications. The challenge here is to supply ARToolkit's transformation matrix to OGRE's graphics object. This can be achieved by running OGRE and ARToolkit in two separate threads.
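To make the two-thread idea more concrete, here is a minimal sketch of how a tracking thread could hand its latest pose to a rendering thread. It uses only the C++ standard library; `SharedPose`, `trackingLoop` and `runTracker` are hypothetical names invented for this illustration, and the tracking loop just publishes made-up poses instead of calling ARToolkit.

```cpp
#include <array>
#include <functional>
#include <mutex>
#include <thread>

// 3x4 marker transform [R | t]: three rows, rotation in columns 0-2, translation in column 3.
using Pose = std::array<std::array<double, 4>, 3>;

// Hypothetical mailbox shared by the tracking thread and the render thread.
class SharedPose {
public:
    // Called by the tracking thread whenever a new pose is available.
    void publish(const Pose& p) {
        std::lock_guard<std::mutex> lock(mutex_);
        pose_ = p;
        valid_ = true;
    }

    // Called by the render thread once per frame.
    // Copies the latest pose into `out`; returns false if none was published yet.
    bool latest(Pose& out) const {
        std::lock_guard<std::mutex> lock(mutex_);
        if (!valid_) return false;
        out = pose_;
        return true;
    }

private:
    mutable std::mutex mutex_;
    Pose pose_{};
    bool valid_ = false;
};

// Stand-in for the tracking loop (assumption: a real one would detect the marker
// in each camera frame). It publishes `frames` poses with identity rotation and
// an x translation that grows by 1 per frame.
void trackingLoop(SharedPose& shared, int frames) {
    for (int i = 0; i < frames; ++i) {
        Pose p{};
        p[0][0] = p[1][1] = p[2][2] = 1.0;
        p[0][3] = static_cast<double>(i);
        shared.publish(p);
    }
}

// Runs the tracker on its own thread, waits for it to finish, and returns
// the last pose a renderer would read from the mailbox.
Pose runTracker(int frames) {
    SharedPose shared;
    std::thread tracker(trackingLoop, std::ref(shared), frames);
    tracker.join();
    Pose out{};
    shared.latest(out);
    return out;
}
```

In a real application the render thread would call `latest()` once per frame while the tracker keeps running, rather than waiting for it to finish. Copying the whole matrix under the lock keeps the renderer from ever seeing a half-written pose.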

I know it's quite lame (for pros), but let me introduce ARToolkit a little bit. It's a set of C/C++ libraries and header files that facilitates creating marker-based AR applications.

You can find a file called ogre.cfg that gets created when you run an OGRE application for the first time. Usually this file resides in the bin\debug directory of the OGRE installation; in my case it's C:\OgreSDK\OgreSDK_vc10_v1-7-4\bin\debug. It's up to the developer to choose which rendering method to go with. Modify this file to set the desired rendering method.
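For orientation, an ogre.cfg generated by an OGRE 1.7-era SDK looks roughly like the fragment below. This is an illustrative sketch from memory, not a copy of the file used here: the option names and values depend on the OGRE version, the installed plug-ins and the graphics driver, so check your own generated file before editing it. The first line selects the active render system.

```
Render System=OpenGL Rendering Subsystem

[OpenGL Rendering Subsystem]
Colour Depth=32
Full Screen=No
VSync=No
Video Mode=800 x 600
```

Changing the first line to the name of another section present in the file (for example a Direct3D subsystem) should switch the rendering method on the next start.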

#Artoolkit tutorial 2016 drivers#

OGRE is capable of drawing 3D graphics as well as animations in a window. Generally, OGRE provides two options for rendering graphics, i.e. OpenGL and Direct3D. Both OpenGL and Direct3D communicate closely with the underlying video graphics drivers in order to render the output. It's in OGRE's render window that we display both the camera background and the 3D graphic. The first challenge was aligning the pose of the marker accurately with the graphic object. The second was to set the background of OGRE's render window to the camera view.
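For the pose-alignment part, one common approach is to split the marker transform into a translation and a rotation quaternion, since a scene graph usually takes position and orientation separately. The sketch below assumes the pose arrives as a 3x4 matrix [R | t] (the shape ARToolkit's `arGetTransMat` fills in) and uses the standard trace-based matrix-to-quaternion conversion. `Mat34`, `Quat`, `Vec3`, `rotationToQuat` and `translationOf` are hypothetical helper names, and any axis flip between the camera and renderer coordinate systems is deliberately left out.

```cpp
#include <array>
#include <cmath>

using Mat34 = std::array<std::array<double, 4>, 3>; // rows of [R | t]

struct Quat { double w, x, y, z; };
struct Vec3 { double x, y, z; };

// Converts the rotation part (columns 0-2) into a unit quaternion.
// Each branch divides by the largest component to stay numerically stable.
Quat rotationToQuat(const Mat34& m) {
    const double trace = m[0][0] + m[1][1] + m[2][2];
    Quat q{};
    if (trace > 0.0) {
        const double s = 2.0 * std::sqrt(trace + 1.0); // s = 4 * w
        q.w = 0.25 * s;
        q.x = (m[2][1] - m[1][2]) / s;
        q.y = (m[0][2] - m[2][0]) / s;
        q.z = (m[1][0] - m[0][1]) / s;
    } else if (m[0][0] > m[1][1] && m[0][0] > m[2][2]) {
        const double s = 2.0 * std::sqrt(1.0 + m[0][0] - m[1][1] - m[2][2]); // s = 4 * x
        q.w = (m[2][1] - m[1][2]) / s;
        q.x = 0.25 * s;
        q.y = (m[0][1] + m[1][0]) / s;
        q.z = (m[0][2] + m[2][0]) / s;
    } else if (m[1][1] > m[2][2]) {
        const double s = 2.0 * std::sqrt(1.0 + m[1][1] - m[0][0] - m[2][2]); // s = 4 * y
        q.w = (m[0][2] - m[2][0]) / s;
        q.x = (m[0][1] + m[1][0]) / s;
        q.y = 0.25 * s;
        q.z = (m[1][2] + m[2][1]) / s;
    } else {
        const double s = 2.0 * std::sqrt(1.0 + m[2][2] - m[0][0] - m[1][1]); // s = 4 * z
        q.w = (m[1][0] - m[0][1]) / s;
        q.x = (m[0][2] + m[2][0]) / s;
        q.y = (m[1][2] + m[2][1]) / s;
        q.z = 0.25 * s;
    }
    return q;
}

// Extracts the translation part (column 3).
Vec3 translationOf(const Mat34& m) {
    return { m[0][3], m[1][3], m[2][3] };
}
```

With OGRE, the two results could then be handed to a scene node through `setPosition` and `setOrientation`. Whether the y and z axes need to be negated first depends on how the camera projection is set up, so treat that step as something to verify against your own scene.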

#Artoolkit tutorial 2016 free#

This application was tested on a Win32 platform.

As you move the pattern around, the 3D objects will move along. This last demo ships with all the C source code. So if you are interested in learning more about augmented reality and starting to program your own application, continue reading on the ARToolkit website for tutorials, or go to the Studierstube to get ARToolkit Plus, which might be more comfortable or convenient for you. Of course, there are dozens of different free and commercial systems, but for now I only wanted to give you a brief and free impression of what's easily possible.

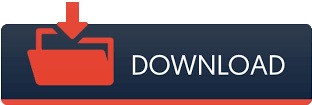

Now print the file ARToolKit\patterns\pattHiro.pdf and start the program simpleVRML. You will see a bee buzzing above a flower, on your hand! (This demo uses marker-based tracking, i.e. you have to hold a black/white pattern into the camera's view. This printed square will be recognised as the anchor where the program will put the graphics.)

#Artoolkit tutorial 2016 download#

Download the ARToolkit package and unpack it. If you run Windows, copy this file into the unpacked folder ARToolKit\bin, or download the OpenGL GLUT files from the web and copy them to your folder. If you have no clue what that means, don't worry. Try one of the other demos that are shipped along.

After some loading you will see your webcam image as a live feed in your browser, and when you keep your face inside the camera view, you'll be automatically transformed into an Autobot from Transformers! (This demo uses face recognition and tracking. Nothing else is needed besides your human looks. But those you'll need, since the face recognition will look for two eyeballs, one nose, etc.)

Download the Unifeye Design Demo Installer (Windows only). After installing, go to the subfolder (probably) C:\Program Files\metaio\Unifeye Design Demo\examples\scenes\Knights&Dragons and print the file Drache_Marker_1.jpg. Now start the Unifeye Design Demo link on your desktop. At the bottom of the screen you will see video play controls (rwd, play, stop, fwd). Right next to those you'll have three vertically aligned buttons: click the lower one and then select your webcam on the right. Go to File > Open and select examples > scenes > knights&dragons > DemoScene_Knights&Dragons.scef. Now hold the printed paper into the view! (This demo uses feature-based tracking. In this case the tracked target is the castle image you printed.) The whole tool can do much more, and you can even import your own VRML files.

#Artoolkit tutorial 2016 install#

Open the demo page and install the plug-in when asked.

#Artoolkit tutorial 2016 movie#

During this rainy summer I figured this could be the perfect moment for you to get started with augmented reality, too! You have seen the demos on YouTube? You were intrigued by movie effects? But how could you try it out easily and, for now, without any skills in augmented reality or programming? Here is the answer! All you need is a neat webcam, or even a crappy one. Then try one or all of the following demos to get a first impression yourself! No movie, but all real-time in your hands!
